Yesterday I needed to plot locations on a driving circuit. Ten minutes later I had a professional grid overlay on a high-resolution site map. I didn’t write a line of code. I didn’t install software. I just described what I wanted.

This is the new normal.

The problem I was solving

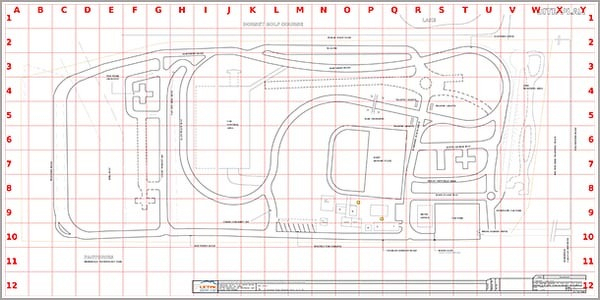

I needed to reference specific locations across the road circuit map. Describing spots as “near the northern golf course entrance” or “by that building on the east side” gets ambiguous fast.

The solution was obvious: a grid system. Like a street directory. Column letters across the top, row numbers down the sides. Then any location becomes a simple coordinate.

Great idea. No ability to execute it myself.
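The arithmetic behind a grid reference is small. Here is a minimal Python sketch, assuming columns are lettered left to right and rows are numbered top to bottom. The function name `cell_for_point` is hypothetical and does not come from any script mentioned in this post.

```python
import string

def cell_for_point(x: float, y: float, width: int, height: int,
                   cols: int = 25, rows: int = 12) -> str:
    """Return the grid reference (e.g. 'C7') for pixel (x, y) on a map.

    Hypothetical helper: columns are lettered A, B, C... left to right and
    rows numbered 1, 2, 3... top to bottom, like a street directory.
    """
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError("point lies outside the image")
    if cols > 26:
        raise ValueError("single-letter column labels stop at Z")
    col = int(x * cols // width)   # 0-based column index
    row = int(y * rows // height)  # 0-based row index
    return f"{string.ascii_uppercase[col]}{row + 1}"
```

On a 2500×1200 pixel map with the 25×12 grid, each cell is 100 pixels square, so `cell_for_point(250, 650, 2500, 1200)` returns `"C7"`.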

The method

I asked Google Gemini for a Python script to overlay a grid on an image. It gave me exactly what I needed: PIL for image manipulation, a function to draw lines and labels, parameters for a 25×12 grid with columns A-Y and rows 1-12.

The script was clean, well-commented and specific. But here’s the thing: I don’t write Python. I definitely don’t run command-line tools. I could read the script and understand what it should do. Actually making it work? That’s usually where ideas die.
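For readers who do write Python, an overlay like the one Gemini described might look roughly like this. It is a minimal sketch assuming the Pillow fork of PIL, not the script Gemini actually produced. `draw_grid` and its parameters are hypothetical names, and to stay short it labels only the top and left edges.

```python
import string
from PIL import Image, ImageDraw, ImageFont

def draw_grid(image: Image.Image, cols: int = 25, rows: int = 12,
              color: str = "red", line_width: int = 2) -> Image.Image:
    """Return a copy of `image` with a cols x rows grid drawn over it.

    Hypothetical helper: columns are lettered along the top edge and rows
    numbered down the left edge, using Pillow's built-in default font.
    """
    if cols > 26:
        raise ValueError("single-letter column labels stop at Z")
    out = image.convert("RGB")  # returns a new image; the input is untouched
    draw = ImageDraw.Draw(out)
    font = ImageFont.load_default()
    w, h = out.size
    cell_w, cell_h = w / cols, h / rows

    # Vertical lines, clamped so the last one stays inside the image
    for c in range(cols + 1):
        x = min(round(c * cell_w), w - 1)
        draw.line([(x, 0), (x, h - 1)], fill=color, width=line_width)
    # Horizontal lines, clamped the same way
    for r in range(rows + 1):
        y = min(round(r * cell_h), h - 1)
        draw.line([(0, y), (w - 1, y)], fill=color, width=line_width)

    # Column letters A, B, C... near the top edge, centred on each column
    for c in range(cols):
        cx = round((c + 0.5) * cell_w)
        draw.text((cx, 4), string.ascii_uppercase[c], fill=color,
                  font=font, anchor="mt")
    # Row numbers 1, 2, 3... near the left edge, centred on each row
    for r in range(rows):
        cy = round((r + 0.5) * cell_h)
        draw.text((4, cy), str(r + 1), fill=color, font=font, anchor="lm")
    return out
```

Something like `draw_grid(Image.open("site-map.jpg")).save("site-map-grid.jpg")` would apply it to a map; both filenames are placeholders.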

The execution

I handed the script to Claude Cowork.

What happened next is where this gets interesting.

Claude read the script. Identified that it needed PyMuPDF to convert the PDF and PIL for the image processing. Installed both packages. Converted my PDF site map to a high-resolution JPG. Applied the grid overlay with proper font handling and label positioning. When the labels weren’t visible the first time, it diagnosed the font issue, rewrote the script with better font fallbacks and white backgrounds behind the labels for contrast, and regenerated the output.

All of this happened in the command-line interface. All of it without me knowing how to do any of it.
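The font fix described above, which loads a real TrueType font with fallbacks and draws each label on a white box, could be sketched like this. The candidate font list, `load_font` and `draw_label` are all hypothetical, and the sketch assumes a recent Pillow release.

```python
from PIL import Image, ImageDraw, ImageFont

# Hypothetical candidates: fonts commonly present on Linux, Windows and macOS.
FONT_CANDIDATES = [
    "DejaVuSans-Bold.ttf",
    "arial.ttf",
    "/System/Library/Fonts/Helvetica.ttc",
]

def load_font(size: int):
    """Return the first TrueType font that loads, else Pillow's built-in font."""
    for name in FONT_CANDIDATES:
        try:
            return ImageFont.truetype(name, size)
        except OSError:
            continue  # not installed on this machine; try the next one
    return ImageFont.load_default()

def draw_label(draw: ImageDraw.ImageDraw, xy, text: str, font,
               color: str = "red", padding: int = 3) -> None:
    """Draw `text` at `xy` on a white box so it stays legible over a busy map."""
    left, top, right, bottom = draw.textbbox(xy, text, font=font)
    draw.rectangle(
        [left - padding, top - padding, right + padding, bottom + padding],
        fill="white",
    )
    draw.text(xy, text, fill=color, font=font)
```

The white box addresses the contrast problem: thin red labels can vanish against a detailed map, while a solid background behind each one keeps it readable whichever font ends up loaded.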

Once the font was fixed, the grid came out right. The output was professional: 25 columns, 12 rows, red lines, clear labels on all sides. Exactly what I’d imagined but had no idea how to create.

What this means

The limiting factor has shifted from technical skill to imagination.

I could think of the solution. That used to be the easy part; execution was the hard part. Now execution is trivial if you can describe clearly what you want.

I’ve used AI tools for writing, research and analysis. This felt different. This was the full stack: concept to working output, crossing multiple technical domains, handling errors and iterating until the result was right. The barrier between “I want this” and “here it is” has collapsed.

You don’t need to know Python. You don’t need to know which libraries to use. You don’t need to understand package management or CLI syntax or image processing. You need to know what you want and explain it clearly.

That’s not a small shift. That’s the difference between ideas staying as ideas and ideas becoming real things.

The script is sitting there now, ready to reuse. I could modify it, apply it to different images, change the grid dimensions. I still don’t know Python. I don’t need to.

If you can think it clearly enough to describe it, AI can probably do it. The question isn’t whether the tools are ready. It’s whether you’re ready to test how far imagination can take you.